@inproceedings{narayanswamyscaling,

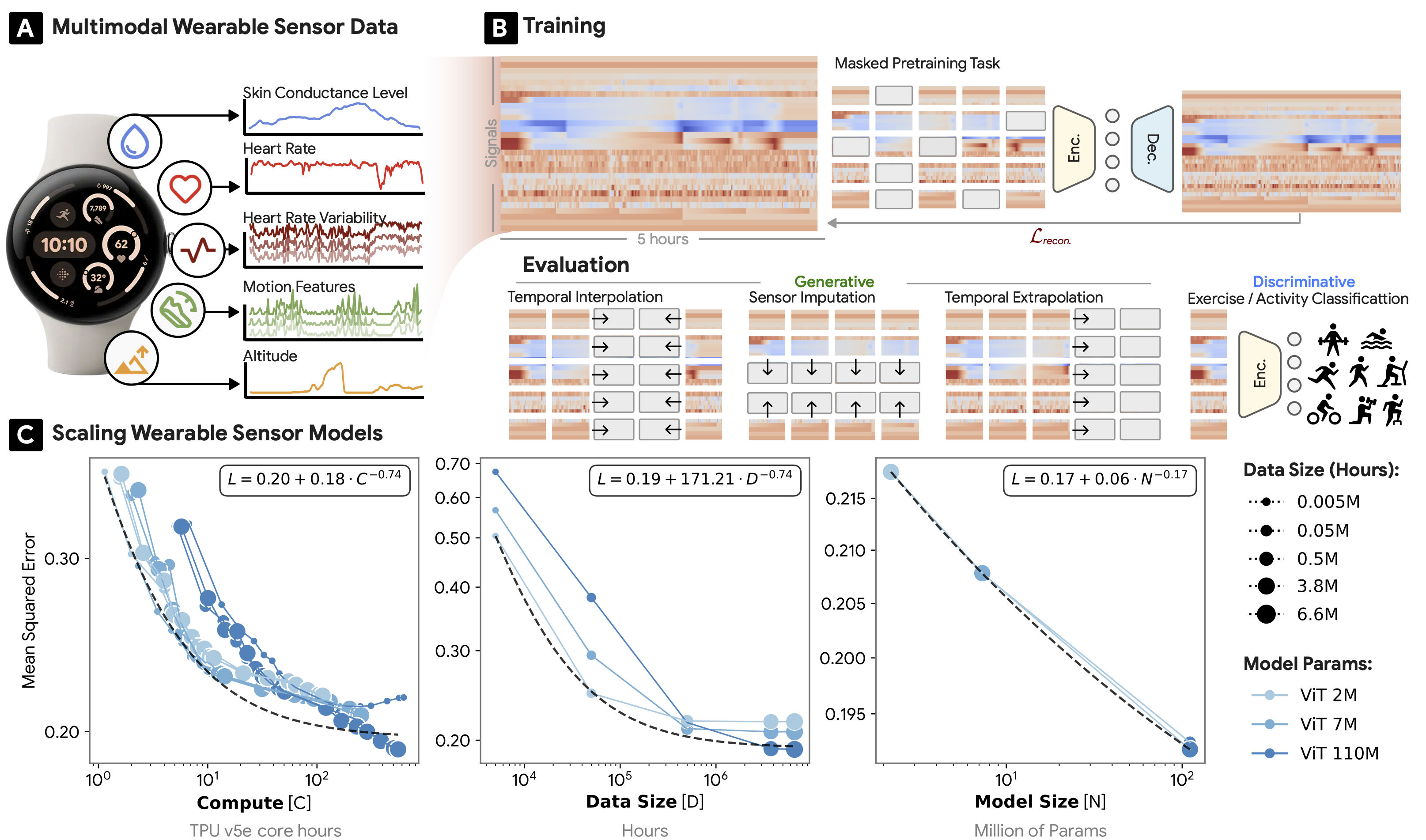

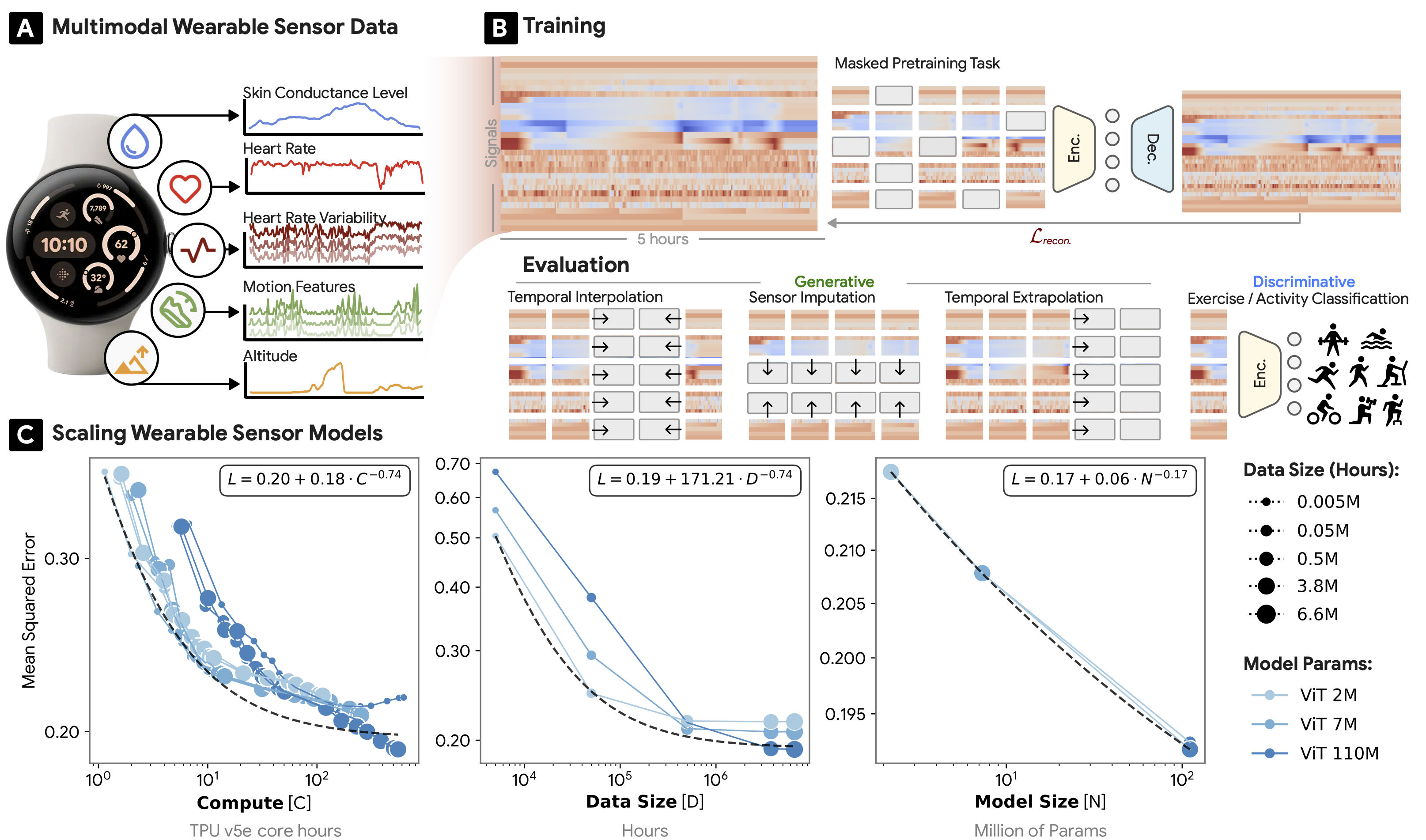

  title={Scaling Wearable Foundation Models},
  author={Narayanswamy, Girish and Liu, Xin and Ayush, Kumar and Yang, Yuzhe and Xu, Xuhai and Garrison, Jake and Tailor, Shyam A and Sunshine, Jacob and Liu, Yun and Althoff, Tim and others},
  booktitle={The Thirteenth International Conference on Learning Representations}
}