@inproceedings{park2021reliable,

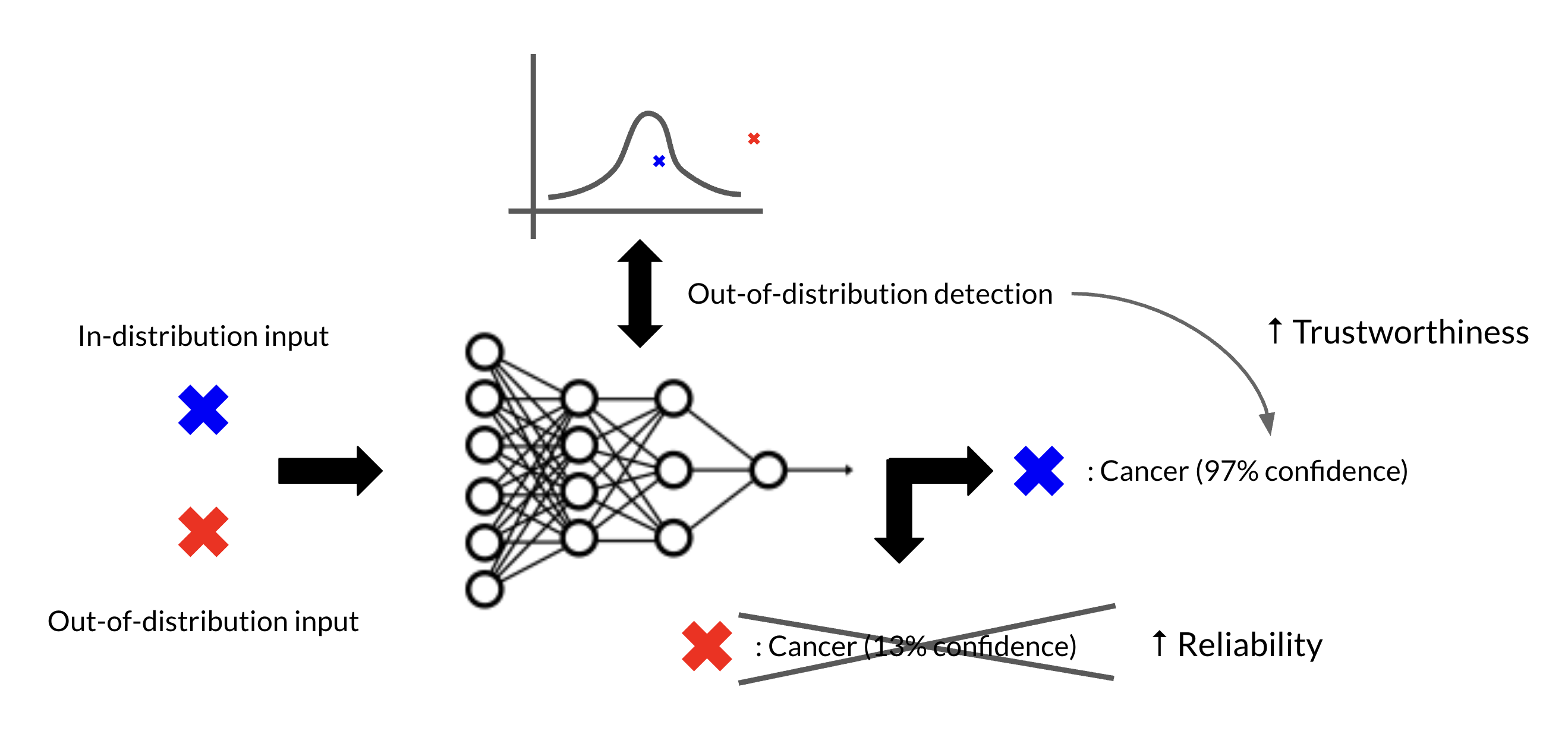

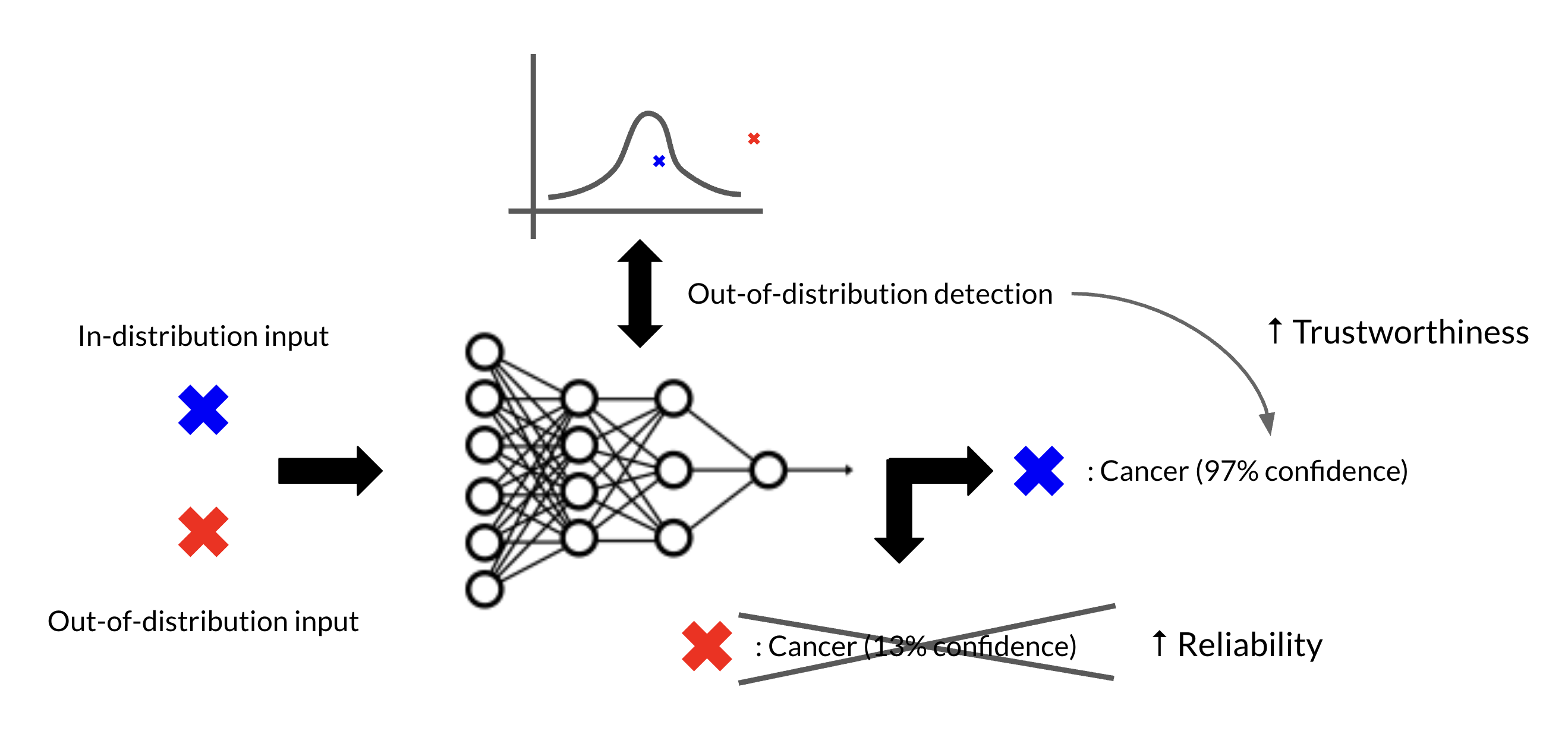

title={Reliable and Trustworthy Machine Learning for Health Using Dataset Shift Detection},

author={Park, Chunjong and Awadalla, Anas and Kohno, Tadayoshi and Patel, Shwetak},

booktitle={Thirty-Fifth Conference on Neural Information Processing Systems},

year={2021}

}