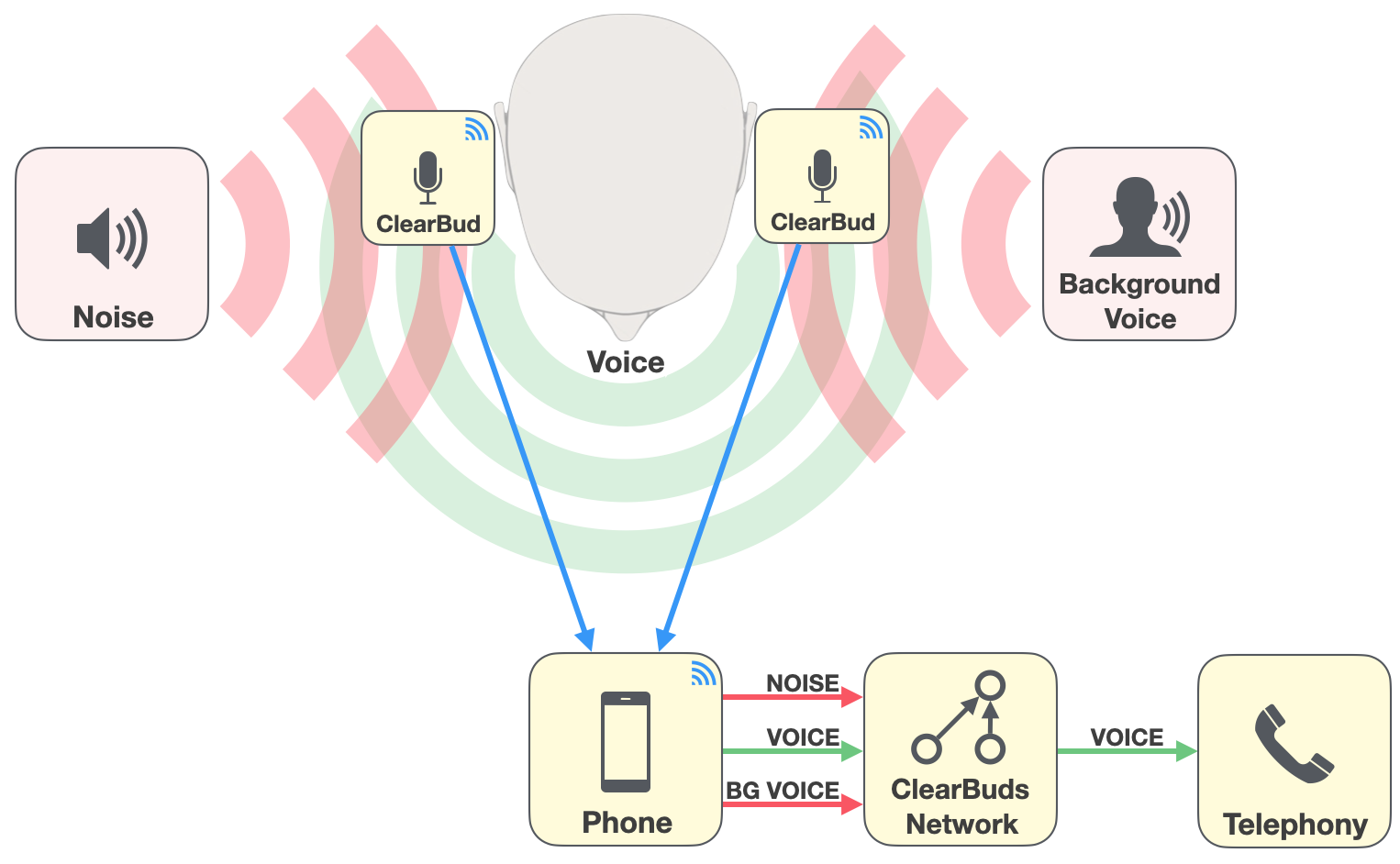

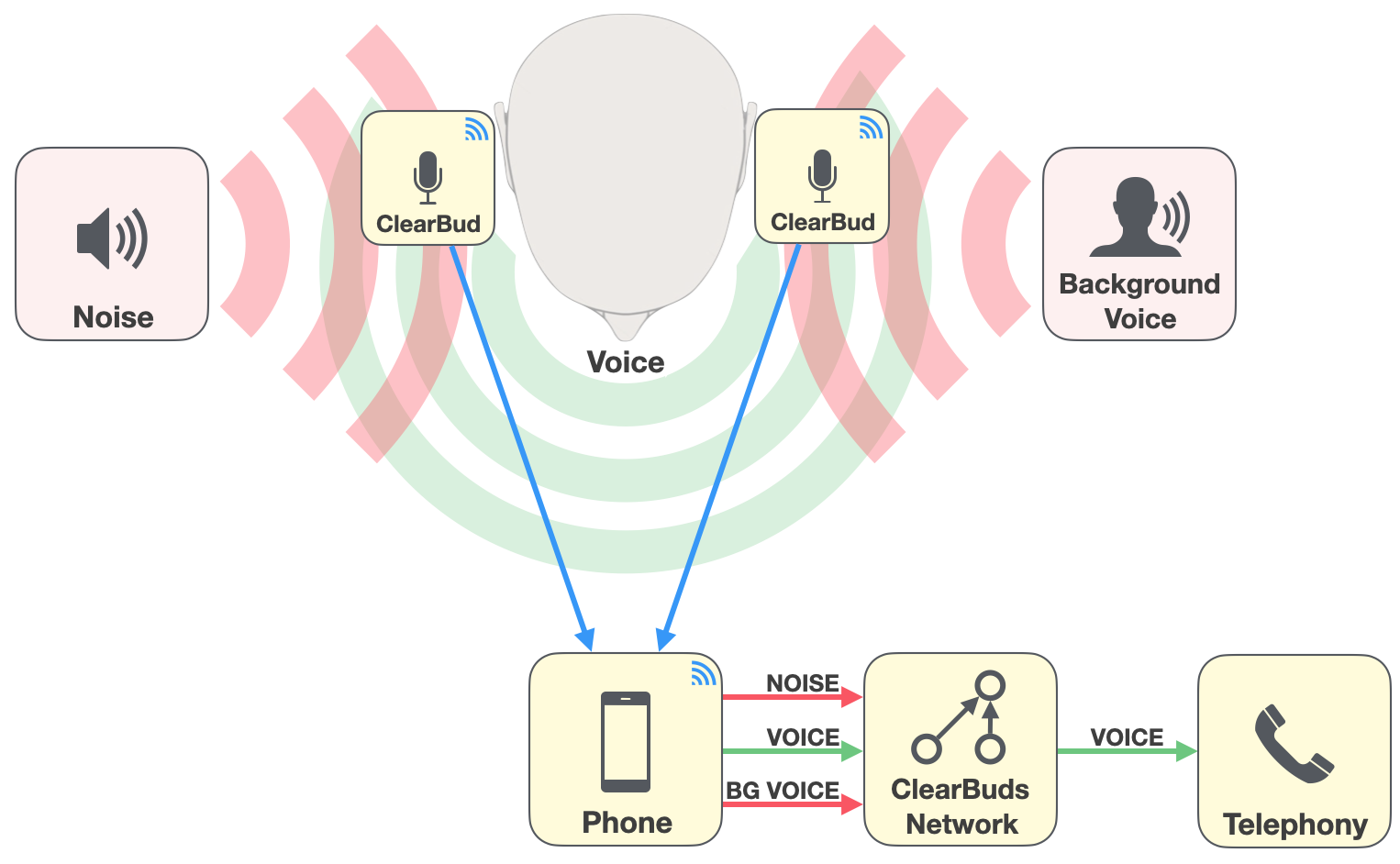

@inproceedings{chatterjee2022clearbuds,
  title={ClearBuds: wireless binaural earbuds for learning-based speech enhancement},
  author={Chatterjee, Ishan and Kim, Maruchi and Jayaram, Vivek and Gollakota, Shyamnath and Kemelmacher, Ira and Patel, Shwetak and Seitz, Steven M},
  booktitle={Proceedings of the 20th Annual International Conference on Mobile Systems, Applications and Services},
  pages={384--396},
  year={2022}
}