@misc{paruchuri2023motion,

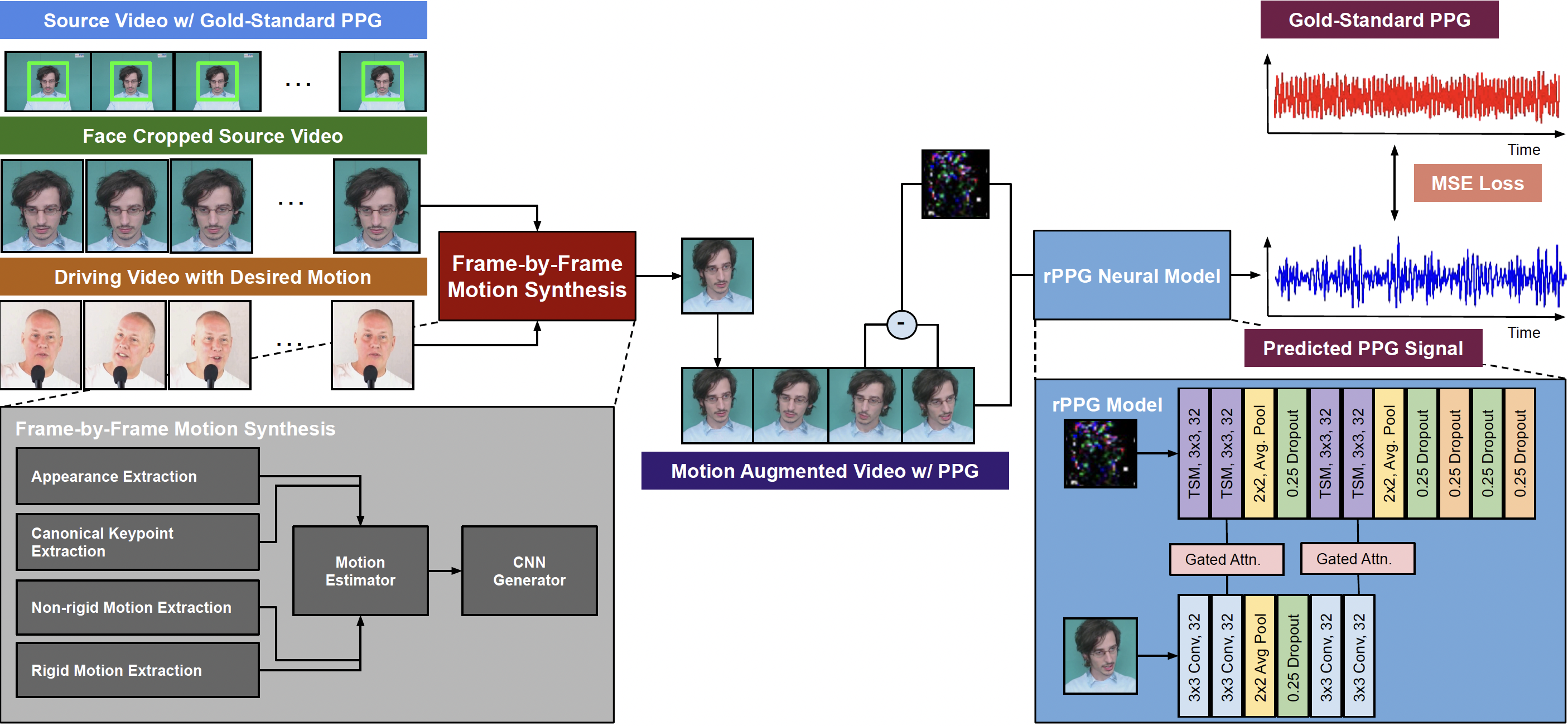

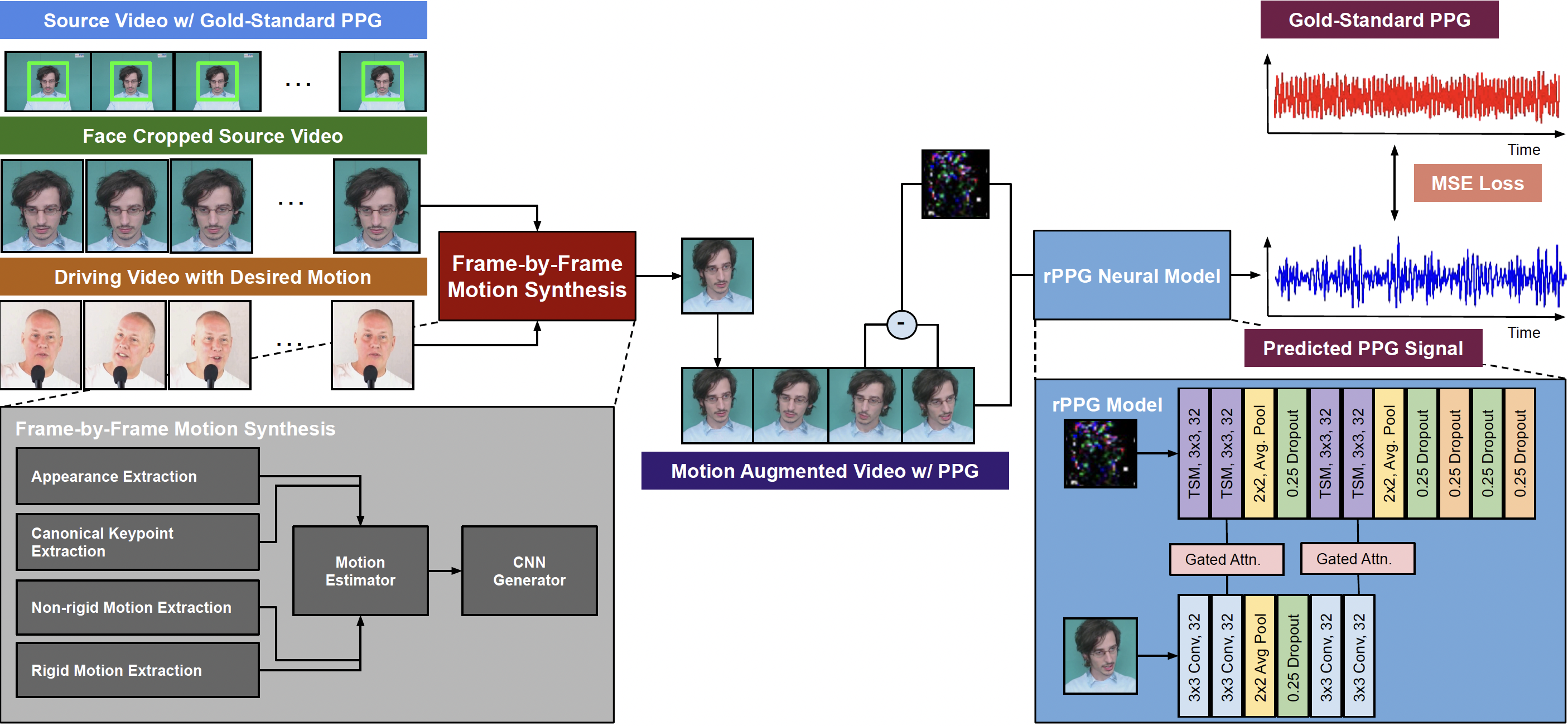

  title={Motion Matters: Neural Motion Transfer for Better Camera Physiological Sensing},
  author={Akshay Paruchuri and Xin Liu and Yulu Pan and Shwetak Patel and Daniel McDuff and Soumyadip Sengupta},
  year={2023},
  eprint={2303.12059},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}