@inproceedings{10.1145/3384419.3430782,

author = {Montanari, Alessandro and Sharma, Manuja and Jenkus, Dainius and Alloulah, Mohammed and Qendro, Lorena and Kawsar, Fahim},

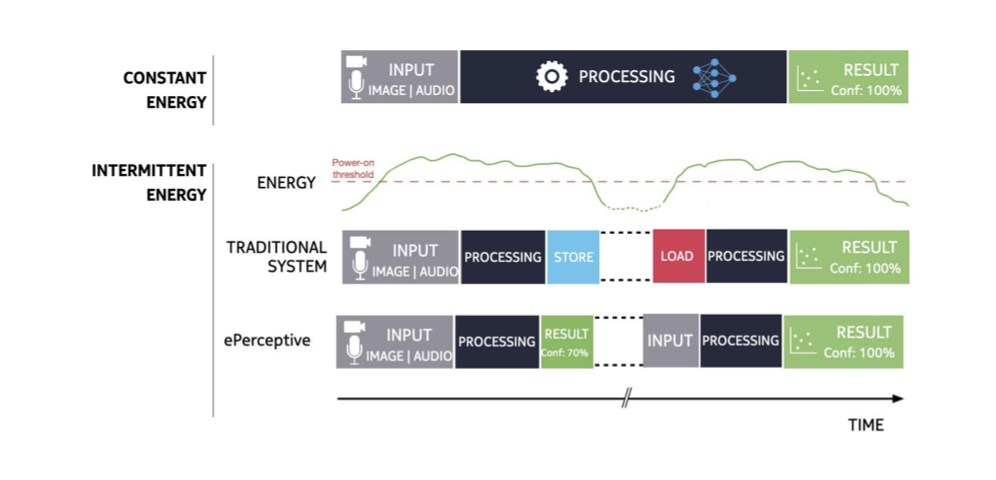

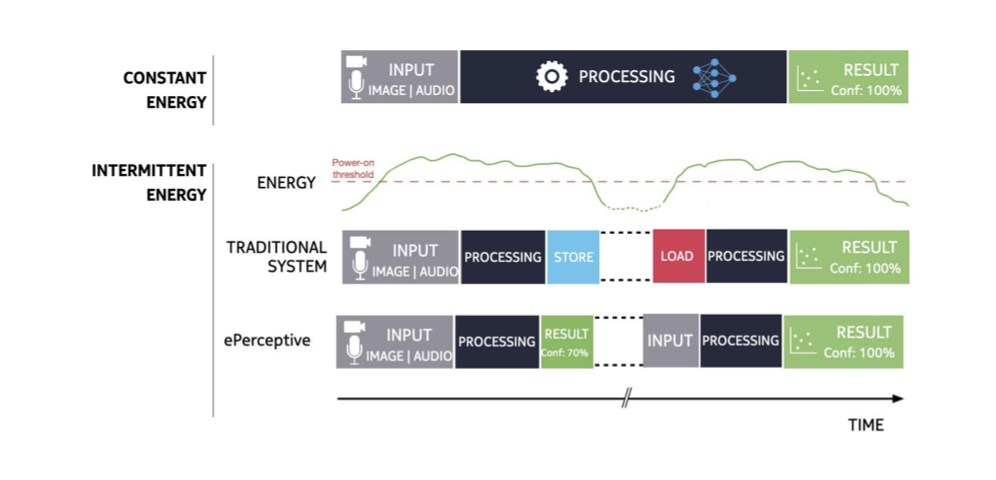

title = {EPerceptive: Energy Reactive Embedded Intelligence for Batteryless Sensors},

year = {2020},

isbn = {9781450375900},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3384419.3430782},

booktitle = {Proceedings of the 18th Conference on Embedded Networked Sensor Systems},

pages = {382--394},

numpages = {13} }